What's DeepSeek?

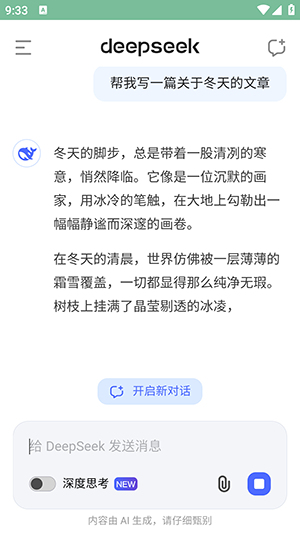

DeepSeek AI can help with this. The DeepSeek chatbot uses the DeepSeek-V3 model by default, but you can switch to its R1 model at any time by clicking or tapping the 'DeepThink (R1)' button beneath the prompt bar. Whether you're using it for research, creative writing, or business automation, DeepSeek-V3 offers advanced language comprehension and contextual awareness, which makes AI interactions feel more natural and intelligent. Making sense of your data shouldn't be a headache, no matter how big or small your organization is. Update: An earlier version of this story implied that Janus-Pro models could only output small (384 x 384) images. We keep updating this with every new version, so check back for the next update. I'll keep tweaking all of these settings to get the best output, and I'll also keep testing new models as they become available. DeepSeek's R1 API is priced at $0.55 per million input tokens and $2.19 per million output tokens. "It should run in PyScript." Once again, the difference in output was stark. DeepSeek AI vs ChatGPT: What Is the Difference? To advance its growth, DeepSeek has strategically used a mix of speed-capped GPUs designed for the Chinese market and a substantial reserve of Nvidia A100 chips acquired before recent sanctions.
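To make per-token pricing concrete, here is a minimal sketch that converts token counts into a dollar estimate. It assumes the per-million-token rates quoted above ($0.55 for input, $2.19 for output) as defaults; the function name `estimate_cost_usd` is hypothetical, and the sketch ignores any other pricing tiers or discounts the provider may offer.

```python
def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      input_price_per_m: float = 0.55,
                      output_price_per_m: float = 2.19) -> float:
    """Estimate API cost in USD from token counts.

    Hypothetical helper: prices are given per million tokens and default
    to the rates quoted in the text. Other pricing tiers are not modeled.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = (input_tokens / 1_000_000) * input_price_per_m
    output_cost = (output_tokens / 1_000_000) * output_price_per_m
    # Round to hide floating-point noise such as 2.7399999999999998.
    return round(input_cost + output_cost, 6)


# 200k input tokens and 50k output tokens:
print(estimate_cost_usd(200_000, 50_000))  # 0.2195
```

Passing different `input_price_per_m` and `output_price_per_m` values lets you compare providers with the same token budget.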

Microsoft and Alphabet shares fell ahead of the market opening. On January 27, 2025, major tech firms, including Microsoft, Meta, Nvidia, and Alphabet, collectively lost over $1 trillion in market value. By January 27, 2025, DeepSeek had overtaken ChatGPT as the most downloaded free app on the iOS App Store in the USA, contributing to an 18% drop in Nvidia's share price. Launched on January 20, R1 quickly gained traction, leading to a drop in Nasdaq 100 futures as Silicon Valley took notice. The AI firm turned heads in Silicon Valley with a research paper explaining how it built the model. This cost disparity has sparked what Kathleen Brooks, research director at XTB, calls an "existential crisis" for U.S. DeepSeek AI's decision to open-source both the 7 billion and 67 billion parameter versions of its models, including base and specialized chat variants, aims to foster widespread AI research and commercial applications. All trained reward models were initialized from Chat (SFT). Our final answers were derived through a weighted majority voting system, where the answers were generated by the policy model and the weights were determined by the scores from the reward model.
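Weighted majority voting can be sketched in a few lines: identical answers pool the reward-model scores of every sample that produced them, and the answer with the highest total wins. This is a minimal illustration, not the authors' code; it assumes answers are already normalized to comparable strings and that each sample has exactly one reward score. The name `weighted_majority_vote` is hypothetical.

```python
from collections import defaultdict


def weighted_majority_vote(answers: list[str], scores: list[float]) -> str:
    """Return the answer whose samples have the highest total reward score.

    answers[i] is the i-th answer sampled from the policy model;
    scores[i] is the reward model's score for that sample.
    """
    if len(answers) != len(scores):
        raise ValueError("answers and scores must have the same length")
    if not answers:
        raise ValueError("need at least one candidate answer")

    totals: dict[str, float] = defaultdict(float)
    for answer, score in zip(answers, scores):
        # Identical final answers pool their weights.
        totals[answer] += score

    # On ties, max() keeps the answer that was seen first.
    return max(totals, key=totals.__getitem__)


samples = ["42", "17", "42"]
rewards = [0.2, 0.9, 0.3]
print(weighted_majority_vote(samples, rewards))  # 17
```

In this example a plain majority vote would pick "42" (two votes), but the weighted vote picks "17" because its single sample has a higher total reward (0.9 vs 0.5).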

DeepSeek-V2, a general-purpose text- and image-analyzing system, performed well in various AI benchmarks and was far cheaper to run than comparable models at the time. You can ask it to search the web for relevant information, reducing the time you would have spent searching for it yourself. If you have a GPU with 24GB of memory (an RTX 4090, for example), you can offload some of the model's layers to the GPU for faster processing. For smaller models (7B, 16B), a powerful consumer GPU like the RTX 4090 is sufficient. This model offers performance comparable to advanced models like OpenAI's o1 but was reportedly developed at a much lower cost. DeepSeek's R1 is designed to rival OpenAI's o1 in several benchmarks while operating at a significantly lower cost. By comparison, OpenAI's much larger o1 model charges $15 per million tokens. While DeepSeek's and OpenAI's models look fairly similar, there are some tweaks that set them apart. As DeepSeek develops its AI, companies are rethinking their strategies and investments. DeepSeek has adapted its strategies to overcome challenges posed by US export controls on advanced GPUs.
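How many layers to offload can be estimated with back-of-the-envelope arithmetic. The sketch below is a hypothetical model, not a measured rule. It assumes every layer takes the same share of the model's memory and that a fixed reserve (the `reserve_gb` parameter) covers the context cache, activations, and runtime overhead. Real usage varies with quantization, context length, and the runtime. Runtimes such as llama.cpp take the result as a GPU-layer count setting.

```python
import math


def max_gpu_layers(vram_gb: float, n_layers: int, model_size_gb: float,
                   reserve_gb: float = 2.0) -> int:
    """Estimate how many transformer layers fit in GPU memory.

    Hypothetical back-of-the-envelope model: each layer is assumed to use
    model_size_gb / n_layers of memory, and reserve_gb is held back for the
    context cache, activations, and the runtime itself.
    """
    if n_layers <= 0 or model_size_gb <= 0:
        raise ValueError("n_layers and model_size_gb must be positive")
    usable_gb = vram_gb - reserve_gb
    if usable_gb <= 0:
        return 0  # nothing left after the reserve: keep every layer on the CPU
    per_layer_gb = model_size_gb / n_layers
    # Never offload more layers than the model actually has.
    return min(n_layers, math.floor(usable_gb / per_layer_gb))


# A hypothetical 40 GB quantized model with 80 layers on a 24 GB card:
print(max_gpu_layers(24, 80, 40))  # 44
```

If the model is small enough to fit entirely (for example, a hypothetical 8 GB, 32-layer model on the same card), the function returns all 32 layers. The remaining layers in the 40 GB case would run on the CPU.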

We evaluate our model on LiveCodeBench (0901-0401), a benchmark designed for live coding challenges. AI observer Shin Megami Boson confirmed it as the top-performing open-source model in his own GPQA-like benchmark. The DeepSeek-V2 model introduced two important breakthroughs: DeepSeekMoE and DeepSeekMLA. So, if you have two quantities of 1, combining them gives you a total of 2. Yeah, that seems right. Training data: Compared to the original DeepSeek-Coder, DeepSeek-Coder-V2 significantly expanded the training data by adding another 6 trillion tokens, bringing the total to 10.2 trillion tokens. DeepSeek: Developed by the Chinese AI company DeepSeek, the DeepSeek-R1 model has gained significant attention due to its open-source nature and efficient training methods. DeepSeek, a Chinese AI lab, has caused a stir in the U.S. DeepSeek's R1 Shakes Up the U.S. DeepSeek's language models, which were trained using compute-efficient techniques, have led many Wall Street analysts, and technologists, to question whether the U.S. However, with LiteLLM, using the same implementation format, you can use any model provider (Claude, Gemini, Groq, Mistral, Azure AI, Bedrock, etc.) as a drop-in replacement for OpenAI models. With this, you can produce professional-looking photos without the need for an expensive studio.